linprog failed to work. why? Did I do something wrong?

John D'Errico

on 10 Nov 2022

Latest activity Reply by John D'Errico

on 10 Nov 2022

I've now seen linear programming questions pop up on Answers recently, with some common failure modes for linprog that people seem not to understand.

One basic failure mode is an infeasible problem. What does this mean, and can it be resolved?

The most common failure mode seems to be a unbounded problem. What does this mean? How can it be avoided/solved/fixed? Is there some direction I can move where the objective obviously grows without bounds towards +/- inf?

Finally, I also see questions where someone wants the tool to produce all possible solutions.

A truly good exposition about linear programming would probably result in a complete course on the subject, and Aswers is limited in how much I can write (plus I'll only have a finite amount of energy to keep writing.) I'll try to answer each sub-question as separate answers, but if someone else would like to offer their own take, feel free to do so as an answer, since it has been many years for me since I learned linear programming.

3 Comments

Time Descending

Sign in to participate

Bounded versus unbounded problems

This is perhaps the most difficult sub-question to answer, partly because it will force me to discuss things like the dual to a linear programming problem.

What does an unbounded linear programming problem mean? That is, when linprog gets upset, and tells you the problem is unbounded, what is it teling you? Perhaps the best way to show this, is with an obviously unbounded problem. We need only one variable. Thus

minimize x

subject a simple bound constraint, x <= 2

Clearly, since x has only an upper bound, we can decrease x as far as we wish. As x goes to -inf, the objective also goes to -inf. See what linprog tells us.

[X,FVAL,EXITFLAG,OUTPUT] = linprog(1,[],[],[],[],[],2)

Linprog returns no solution, though you might argue it could have chosen to return -inf for both X and FVAL. The exitflag of -3 also tells us that fact.

Most linear programming problems are far more difficult to know they are unbounded just by looking at the problem at a glance. In 2 dimensions things are still moderately simple, since you can always plot things. Even in 3-d, you can still try plotting the constraint planes to visualize the problem. Go beyond 3 dimensions, and you may be in more trouble. Remember that the constraints of a linear programming problem will form a polytope. It will always be a convex domain, but it may extend without bound. Of course, that may not be a problem. Even in the simple case above with one variable, linprog will have no problem maximizing x in that one variable problem. So problems on an infinite domain are not always unbounded optimization problems.

I'll repeat the above example to show that linprog has no problem even on an unbounded half line, as long as the objective points the correct way. (This time, using a problem based formulation.)

prob = optimproblem('Description','Bounded example');

x = optimvar('x',[1,1],'lower',2);

prob.ObjectiveSense = 'minimize';

prob.Objective = x(1);

solve(prob)

In 2-d, consider this next moderately simple problem as another example:

Maximize x1 + x2

x1 >= 0, x2 >= 0

x1 + x2 >= 4

2*x1 + x2 >= 5

x1 + 3*x2 >= 6

Again, we should guess this will not have a solution, as an unbounded problem.

prob = optimproblem('Description','Unbounded 2-d example');

x = optimvar('x',[1,2],'lower',0);

prob.ObjectiveSense = 'maximize';

prob.Objective = x(1) + x(2);

prob.Constraints.Con1 = x(1) + x(2) >= 4;

prob.Constraints.Con2 = 2*x(1) + x(2) >= 5;

prob.Constraints.Con3 = x(1) + 3*x(2) >= 6;

solve(prob)

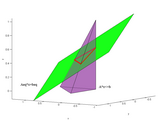

I'll plot the 2-d problem, as a set of half planes using a tool I wrote for this purpose.

plothalfplane([1 1],4,[0,10],[0,10])

plothalfplane([2 1],5,[],[],gcf)

plothalfplane([1 3],6,[],[],gcf)

Here we see the three constraint planes. The overlap region is what we care about. But if the goal of the linear programming problem is to MAXIMIIZE x1+x2, then we can arbitrarily grow the objective as large as we wish, as long as we stay in the triply shaded region, extending out to infinity.

See how this changes, if we modify the problem in one of two way. FOr example, suppose we dicided to minimize that sum?

prob.ObjectiveSense = 'minimize';

solve(prob)

Linprog had no difficulty there. Or, suppose we still maximize, but we now add another constraint?

prob.ObjectiveSense = 'maximize';

prob.Constraints.Con4 = 4*x(1) + 3*x(2) <= 30;

xsol = solve(prob)

plothalfplane([1 1],4,[0,10],[0,10],figure)

plothalfplane([2 1],5,[],[],gcf)

plothalfplane([1 3],6,[],[],gcf)

plothalfplane([-4 -3],-30,[],[],gcf)

hold on

plot(xsol.x(1),xsol.x(2),'rx')

hold off

The solution this time was found at a vertex of the now bounded polytope, represented as the now quadruply shaded region.

By now, I hope you have some understanding of what unbounded means. It tells us there is SOME direction we can move out as far as we want, and continuously improve the objective as much as we want to go.

Can we always know in advance if there will be a problem? As we saw, even if the domain is unbounded, the LP problem may still have a finite solution. A simple answer of course is just to trust linprog, as why would it lie? While the examples we see above are what I would call trivially easy to visualize by inspection, suppose we have some 4 or 10 variable problem? A second question is, if we can easily identify SOME direction where we can move that will show the problem to be unbounded as we move out along some linear path. (An unbounded solution must ALWAYS have some straight line path that extends to infinity, where the objective can be seen to grow infinitely large as we follow that path.)

To go more deeply into this question, now we will need to understand the dual of a linear programming problem. But, as I peruse what I just wrote, I think this will probably need another answer, as I recall there is a limit to the size of any one answer. So, onto my 4th answer to this question. (writing in progress...)

All solutions:

In general, when someone wants to see all possible solutions to a linear programming problem or integer linear programming problem, they are probably asking the wrong question, wanting to use linprog to do some work it was not designed to do. linprog/intlinprog are designed to solve a problem, producing ONE solution. They really don't care if a second solution exists that is equally good.

If a LP has a feasible solution, then since the objective is linear, the solution can always be improved until the solution lies either at a vertex of the polytope containing all feasible solutions, or more rarely, until the solution lies on a boundary. In the latter case, they will be infinitely many solutions, since any point that lies between two admissable vertices will also be equally valid as a solution. This is a consequence of a linear objective on a convex bounded domain. (Of course things get more nasty when integer solutions are demanded, but I'll leave that discussion to later.)

For example, suppose I pose a simple linear programming problem, where a constraint happes to lie parallel to the objective.

>> X = optimvar('X',2,1,'LowerBound',0);

>> prob = optimproblem('Description','Infinitely many solutions');

>> prob.Objective = X(1) + X(2);

>> prob.Constraints.MyConstraint1 = X(1) + X(2) >= 1;

sol = solve(prob)

Solving problem using linprog.

Optimal solution found.

sol =

struct with fields:

X: [2×1 double]

>> sol.X

ans =

0

1

So we find linprog returns ONE solution, at the point [0,1]. This is one vertex of the polytope. (In this case, I was lazy, and the polytope is actually unbounded, but still a solution exits, because I am minimizing the objective.) Yet we should also know the vertex [1 0] is also a solution. However, linprog does not care the least bit. It did not even complain, not notifying us that other solutions also exist, or for that matter, INFINITELY other solutions exist that are equally valid. So [1/2,1/2] is also an equally valid solution.

Of course, linprog/intlinprog cannot be designed to return infinitely many solutions, and your computer does not have the memory to store infinitely many solutions anyway. So really, those who want to see ALL possible solutions are trying to use the wrong tool to solve the problem.

One idea might be to identify the set of vertices of the convex polytope that solve the problem. Some useful tools for this can be found on the file exchange:

Given the set of al vertices of the convex polyheddron representing your contraints, now evaluate the objective function at each vertex, and choose all of them that have the same optimum value, in case there is more than one. The set of all solutions to the linear programming problem that optimize it will lie on the line or polytope that connects the vertices identified. So if two points all have the same minimum objective value, then any point along the line segment connecting those vertices will solve the problem too. If there are three such vertices, then all points in the triangle bounded by those points will be solutions.

In the case of an integer programming problem, this all gets more complex yet, since now we are almost moving into the domain of Diophantine equations. But really, if you want to see all integer solutions to a problem, you may be forced to identify them using some exhaustive search method. I often suggest that you may be trying to solve the wrong problem, or perhaps trying to solve a problem in the wrong way when an exhaustive brute force search is needed. But that is your problem, not mine. Anyway, again, you will need to use other tools than intlinprog to solve the problem.

For example, suppose you wanted to find all integer solutions to the problem

min x+y+z

x + y + z = 100

(x,y,z) >= 1

This reduces to the problem of finding all partitions of the number 100, into a sum of three positive integers. My partitions function (found on the file exchange for download) can return the set of all 833 such ways to perform that sum.

>> size(partitions(100,1:100,3,3),1)

ans =

833

The point is, if you really need the set of all possible solutions to a linear programming problem, you need to use some tool other than linprog/intlinprog to solve the problem.

Analyze N-dimensional Convex Polyhedra

Find vertex or (in)equality forms of convex polyhedra in R^n (for n not super large). Also, compute their intersections and unions.

Feasibility, infeasibility

If linprog tells you the problem is infeasible, that means it could not find a solution that satisfies ALL constraints and bounds, equalities, etc.

I'll pretty mugh ignore the case of equality constraints here, since we can always use them to project a problem with equality constraints into a lower dimensional subspace, but where there are now no equality constraints. (The projection to a lower dimensional subspace will generally convert bound constraints into inequality constraints. But that is not important in this context.)

But what does it mean to be infeasible? Infeasible tells you that your problem represents a contradiction in some form. For example, suppose I asked linprog to solve the problem

minimize x

subject to the constraints that x >= 2 AND x <= 1

Does any solution exist? Of course not, since the feasible set of solutions is empty.

f = 1;

A = [-1;1];

b = [-2;1];

linprog(f,A,b)

Infeasibility is really no more complicated than that. Something in your constraints represents a contradiction. Yes, in higher dimensions, this may be far more complicated to visualize, but in essence, the constraints you have chosen cause the problem to have no solution that satisfies all of them.

Infeasibility might mean you have specified the problem incorrectly. I'd guess that is the most common reason for such a failure. So go back, and check the problem derivation. Insure you have done the mathematics properly, first to specify the problem in terms of mathematics, but then to convert that to a set of constraints, bounds, and an objective.

If it is clear you have done the mathematics correctly, and have all the matrices for the constraints correctly specified, etc., then an infeasible problem means there is a problem in the constraints. They are too constrictive in some sense. Then the solution is simple in concept (though not always simple to resolve.) In some way, you need to relax one more more of the constraints, sufficiently that the feasible set becomes non-empty. In the case of an integer linear programming problem, the feasible set must include at least one integer solution, so that might take a little more relaxation.